OKRs: Delivery Results, Adoption Results, and Impact Results

Years ago I wrote about identifying and focusing on the key metrics that make your product successful as opposed to focusing on a roadmap of tasks.

As Stitch Fix has grown to more than 35 cross-functional product teams, we’ve needed a structured goal-setting process to make sure we’re all working together to achieve our company goals. About two years ago we began using Objectives and Key Results (OKRs) as the primary way we ensure teams focus on impact. OKRs create clarity within our teams, alignment across our teams, and autonomy for our teams.¹

Moving from a roadmap-focused view of the world (the features we’re going to deliver this quarter) to an impact-focused view of the world (the outcomes we are going to drive this quarter) can be hard. Early in that transition I’ve often seen teams share “Key Results” that are really just a list of tasks they’re going to complete.

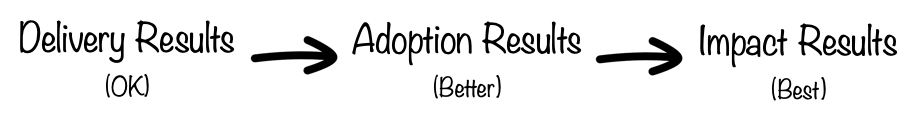

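One technique I’ve found for writing better key results is to think of three types of results, arranged along a continuum from left to right: delivery results, adoption results, and impact results.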

Each type of key result can be valuable, but the ones to the right are better than the ones to the left. By thinking about which type of result we’re using, we can better achieve our objectives.

Delivery Results

Delivery results are the most straightforward. They take the form “Ship X by Y date.”

Sometimes this is the best that we can do. We may have no idea what the impact will be, or there may be a hard compliance requirement that simply must happen by a given date.

An example of a delivery result is “Launch a shopping cart to enable multi-item orders by May 31st.”

However, in the vast majority of cases I think we can do better.

Adoption Results

Adoption results move from ensuring that something is shipped to ensuring that it is used.

This is an improvement because it focuses the team on making what they build good enough that people actually use it.

An example of an adoption result is “80% of purchase orders created using new tool.”

Impact Results

Impact results move from measuring whether something is used to whether it created value.

This is an improvement because you’re now directly measuring the metrics that drive outcomes. Without impact results, you run the risk of building something that is good enough to be used but does not have the impact you intended.

An example of an impact result is “Increase 7-day signup to first purchase conversion rate by 10%.”
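Impact results depend on a precise metric definition. “By 10%” could mean a relative lift (say, from 20% to 22%) or 10 percentage points (from 20% to 30%), so it helps to agree on which is meant when the result is set. Here is a minimal Python sketch that assumes the relative reading and a hypothetical data shape of one (signup time, first purchase time) pair per customer; the function names and sample cohort are illustrative only, not how Stitch Fix actually computes this metric.

```python
import math
from datetime import datetime, timedelta
from typing import Optional

# Hypothetical record per customer: (signup time, first purchase time or None).
Customer = tuple[datetime, Optional[datetime]]


def seven_day_conversion(customers: list[Customer]) -> float:
    """Share of signups whose first purchase came within 7 days of signing up."""
    if not customers:
        return 0.0
    window = timedelta(days=7)
    converted = sum(
        1
        for signup, purchase in customers
        if purchase is not None and purchase - signup <= window
    )
    return converted / len(customers)


def met_relative_target(baseline: float, current: float, lift: float = 0.10) -> bool:
    """True if `current` is at least `lift` (relative) above `baseline`.

    math.isclose absorbs float rounding, e.g. 0.20 * 1.1 != 0.22 exactly.
    """
    target = baseline * (1 + lift)
    return current > target or math.isclose(current, target)


# Example cohort (made-up data).
signup = datetime(2024, 5, 1)
cohort = [
    (signup, signup + timedelta(days=2)),   # converted within the window
    (signup, signup + timedelta(days=10)),  # converted, but too late to count
    (signup, None),                         # never purchased
    (signup, signup + timedelta(days=7)),   # converted on day 7, counts
]
rate = seven_day_conversion(cohort)          # 2 of 4 -> 0.5
print(met_relative_target(0.20, 0.22))       # True: a 10% relative lift
print(met_relative_target(0.20, 0.21))       # False: only a 5% relative lift
```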

Example

Let’s look at a concrete example. Imagine an engineering team focused on building the platform used by other engineers at the company to run applications.

They have the following objective:

Objective: Make it easy for developers to provision the correct amount of server resources

The simplest way to write a result would be a delivery result:

Objective: Make it easy for developers to provision the correct amount of server resources

Key Results:

- Deploy autoscaling capability

We could do better by reworking the result into an adoption result:

Objective: Make it easy for developers to provision the correct amount of server resources

Key Results:

- 85% of apps have enabled autoscaling capability

And we can do even better by writing impact results:

Objective: Make it easy for developers to provision the correct amount of server resources

Key Results:

- 25% reduction in aggregate container memory usage

- Reduce out-of-memory errors from 30 per month to 0

Impact Results Increase Clarity

Impact results increase clarity within a given team. In the delivery and adoption result examples above, there is a fair amount of scope ambiguity: it isn’t clear to the team what can be cut and still count as “autoscaling capability.”

The impact result makes tradeoffs much easier: is a given feature necessary to achieve the results? If not, it can be dropped without jeopardizing the overall objective.

Impact Results Increase Alignment

The greater clarity driven by impact results also helps improve alignment across teams. Perhaps when the product engineering teams see those results, they say, “We don’t have a problem with overprovisioning; our problem is that we’re spending way too much time manually adjusting capacity.” That prompts a conversation in which the results are refined to better match the core of the objective.

Impact Results Increase Autonomy

Finally, impact results allow teams to find creative ways of changing course to better deliver value.

Imagine the team above is using the delivery result. They’ve presupposed the solution and will focus on building the autoscaling capability to the exclusion of other options. There may be iterative development and releases, but the team’s “success” or “failure” will be defined as “did you complete this spec?” If they ship the whole thing on the last day of the quarter, they “achieved their result” even though there has been no concrete improvement toward the overarching objective. Deviations from shipping the defined autoscaling capability will be avoided.

The adoption result encourages slightly better behavior. If half of the applications can be moved over before every feature of the full system is built, the team has a reason to migrate them earlier.

But the impact result is best because it encourages the team to focus on creating real value. If, as part of creating the autoscaling system, the team first audits resource consumption, they may realize they can manually adjust capacity to the proper levels for over- or underprovisioned apps before the autoscaling system is built. This would reduce resource usage (and cost) and out-of-memory errors (improving reliability) while the team is still building out the full autoscaling system.
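One way to see the difference is to score all three versions of the key result against the same quarter. The minimal Python sketch below does that for the scenario in the previous paragraph, where the team right-sizes apps by hand before autoscaling is finished. Everything in it is hypothetical: the `AppSnapshot` fields, the sample numbers, and the function names are illustrative assumptions rather than real platform telemetry.

```python
from dataclasses import dataclass


@dataclass
class AppSnapshot:
    """Hypothetical end-of-quarter telemetry for one application."""
    autoscaling_enabled: bool
    memory_gb_start: float       # container memory at the start of the quarter
    memory_gb_end: float         # container memory at the end of the quarter
    oom_errors_last_month: int


def delivery_result(autoscaling_shipped: bool) -> bool:
    # "Deploy autoscaling capability": only asks whether the feature shipped.
    return autoscaling_shipped


def adoption_result(apps: list[AppSnapshot], target: float = 0.85) -> bool:
    # "85% of apps have enabled autoscaling capability"
    if not apps:
        return False
    enabled = sum(app.autoscaling_enabled for app in apps)
    return enabled / len(apps) >= target


def impact_results(apps: list[AppSnapshot]) -> dict[str, bool]:
    # "25% reduction in aggregate container memory usage"
    start = sum(app.memory_gb_start for app in apps)
    end = sum(app.memory_gb_end for app in apps)
    reduction = (start - end) / start if start else 0.0
    # "Reduce out-of-memory errors from 30 per month to 0"
    ooms = sum(app.oom_errors_last_month for app in apps)
    return {"memory_reduced_25pct": reduction >= 0.25, "zero_oom_errors": ooms == 0}


# Made-up quarter: apps were right-sized by hand, autoscaling not yet finished.
apps = [
    AppSnapshot(False, 40.0, 28.0, 0),
    AppSnapshot(False, 60.0, 44.0, 0),
    AppSnapshot(True, 20.0, 15.0, 0),
    AppSnapshot(False, 30.0, 22.0, 0),
]
print(delivery_result(autoscaling_shipped=False))  # False
print(adoption_result(apps))                       # False: 1 of 4 apps (25%)
print(impact_results(apps))                        # both True: ~27% less memory, 0 OOMs
```

Under these made-up numbers, the delivery and adoption results both read as misses, while the impact results show the objective was largely achieved.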

Always Be Improving

Delivery results and adoption results aren’t inherently bad, and you shouldn’t be afraid to use them. But in most cases we can better achieve our objectives by asking how we’d move one step further along that continuum toward directly measuring impact.

If you’re using delivery results then ask how you can turn them into adoption results. If you’ve defined adoption results then ask how you could change them into impact results. Follow those steps regularly and your teams will achieve better results faster.

-

¹ If you’d like to learn more about OKRs, Measure What Matters is a great book on the subject. If you don’t want to read an entire book (even though it is a very quick read), you can probably get 80% of the benefit by reading Google’s 6-page OKR Playbook.